Intro

"So, what would happen if we replaced floating point numbers with rational numbers and tried to render an image?"

This was the question I posed to myself after some pondering around a tweet by Morgan McGuire, a computer graphics researcher and educator. He was reflecting on how computer science students get surprised when they first learn that the floating point numbers in our current computers have precision tradeoffs that make simple things like checking whether four points are coplanar difficult. In theory one can check for coplanarity through a determinant, or equivalently some cross and dot products. If this operation results in zero, the four points are coplanar. The problem of course is that, due to the constant truncation of floating operations, the results will never be exact and a zero will never be produced in practice. So, checking the coplanarity of four points is technically impossible, unless we accept some thresholding.

This is common knowledge to any seasoned computer graphics aficionado like myself, but it sparkled the following thought - if all the input data to a renderer (vertex coordinates, 3D transformations, etc) was given as rational numbers instead of floating point numbers, then all operations from ray generation, to acceleration structure traversal, to the ray-triangle intersection could be carried in rational numbers too, to eventually produce intersection points expressed as rational coordinates. Then, one should be able to compute coplanarity exactly, for rational numbers express quantities exactly, never as an infinite series of decimals that need truncation. This is because rational numbers are expressed as the ratio of two integers, and integers support exact arithmetic, so our coplanarity tests would be exact!

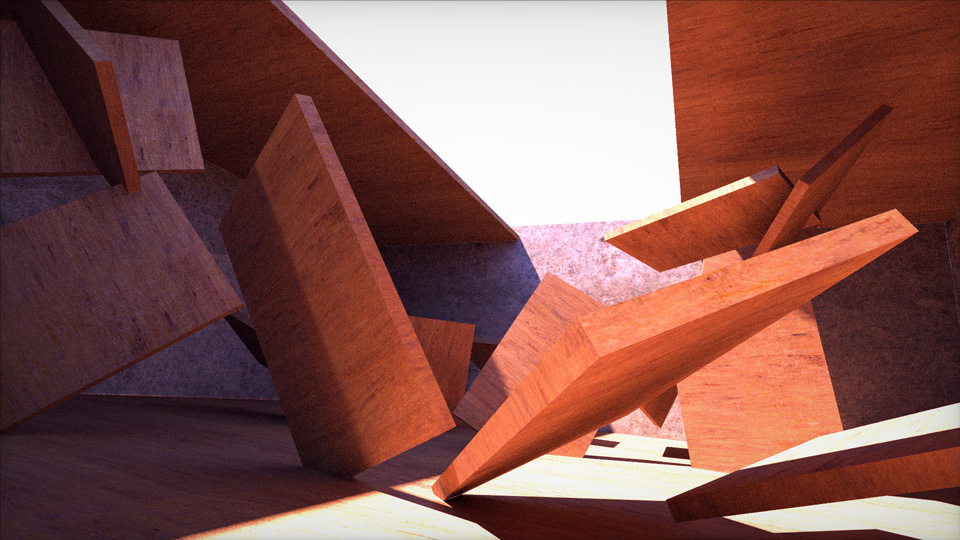

Pathtracing using rational numbers in the "floating bar" format invented for this article, instead of the usual floating point numbers

But for those in need of a refresher, let's review rational numbers. As said, a rational number is one that can be expressed as the ratio of two integers, such as 1/2 or 355/113. Rational numbers can be added, subtracted, multiplied and divided to produce rational numbers again. Formally, a mathematician would say that a set of rational numbers forms a field, which implies it's closed under the four basic arithmetic operations, which means again that rational numbers combine to produce rational numbers only. In other words, rational numbers will never "leak" into the territory of infinite decimals, imaginary numbers, or other funny things. These are the numbers Pythagoras found pure and perfect. We don't, although we find them useful in some applications; and my hope was that they would be helpful for what we could call "Exact Rendering" too.

Now, the typical rendering operations, from ray generation, to bounding box tests during BVH traversal, to ray-triangle intersections, ray reflections for shading, etc, are all based on cross and dot products and scalar multiplications, including all coordinate transformations and matrix inverses and quaternion logic. But implementing cross and dot products in 3D engines is usually done based on the four basic arithmetic operations of addition, subtraction, multiplication and division. So that means that in a world of rational arithmetic, cross and dot products will also be computed exactly without truncations. I could of course already foresee problems with the size of the rational numbers, but from a theoretical standpoint, they idea of producing mathematically exact renders seemed sound in principle.

Or almost sound; there are a few operations that fall outside of the rational field. These are the trigonometric functions (sin, cos, atan) and also square root. For the former, I've always expressed my opinion that trigonometry is to be only in the outer layers of an engine, close to the user input, and that if you find yourself doing trigonometry in the inner parts of your renderer, you are most probably doing things wrong (and have shown how to fix one of the most typical examples). In short, it is very easy to build a trigonometry-free engine. Doing trigonometry in rationals also doesn't seem too crazy, if the need really was there, anyways.

As for square roots, I think that except for conics sections (spheres, cylinders, etc) and actual shading/brdf-ing/coloring, one doesn't really need to normalize the rays and surface normals as often as we usually tend to. There’s this common practice among programmers of normalizing every single direction vector, “just in case”. In reality, it’s very rarely necessary. Ray generation certainly doesn’t need it - ray direction need not be normalized for BVH traversal or ray-triangle intersection to function. Reflections don’t need it either. I can barely think of anything that needs it, other than shading.

All this to say, that I was very confident I could write a renderer without square roots or trigonometry calls, and if fully implemented with rational numbers then I should be getting mathematically exact renders, and perform including unambiguous coplanarity tests on top of it if so desired.

This article is the story of a two nights exploration I conducted trying to evaluate how feasible the idea was, the multiple things that I learnt and invented along the way, and the few surprises I found. It's written in more or less chronological order as things unfolded, and in a rather informal way. Now, the image above could be seen as a bit of a spoiler, but read until the end of the article because I bring you both good and bad news.

Setup

The first thing I did was to implement a bare minimal tracer in Shadertoy for a super simple scene composed of a plane, a sphere, a box and a triangle - the building blocks of a real renderer. Then I copy+pasted the code to a C++ file and after a couple minor tweaks I was up and running. That gave me my traced image rendered in the CPU with regular IEEE754 floating point numbers, for reference. I also removed all ray normalizations in the tracing code, since none of them were really needed as discussed earlier. As a reminder, normalization involves a square root and rational numbers are not closed under rooting (the square root of a rational number is seldom a rational number). And while later on we'll see that we can of course still do square roots, I wanted to keep the code as mathematically pure as possible to see how far could I go with exact, rounding-free arithmetic of rational numbers.

The last preparation step was to take all the vec3, mat4x4 and other basic algebra/math classes and modify them to use "rational" instead of "float". Since my "rational" struct overloaded all common operators (add, sub, mul, div, negation, comparisons, etc) the change went smoothly. I quickly implemented the rest of the usual operations (abs, sign, mod, fract, floor, sqrt, etc), which in theory was all I really needed to do in order to get pretty rational renders.

// a 65 bit rational number

struct rational

{

bool s; // sign

uint32_t n; // numerator

uint32_t d; // denominator

};

bool operator==( rational const & a, rational const & b );

bool operator< ( rational const & a, rational const & b );

bool operator> ( rational const & a, rational const & b );

bool operator< ( rational const & a, int const & b );

bool operator> ( rational const & a, int const & b );

rational operator*( rational const & a, rational const & b );

rational operator+( rational const & a, rational const & b );

rational operator/( rational const & a, rational const & b );

rational operator-( rational const & a, rational const & b );

rational floor( const rational & a );

rational fract( const rational & a );

rational min( const rational & a, const rational &b );

rational max( const rational & a, const rational &b );

rational abs( const rational & a );

rational sqrt( const rational & a ); // not exact

Test 1 - the naive thing

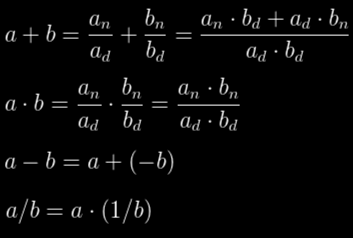

But let's see how this initial implementation was. I always try the simplest thing first, see how that goes. And the simplest way to implement rationals is to use two integers, a numerator N and a denominator D that produce a value x = N/D. I chose to enforce both numerator and denominator to be positive, and I stored the sign of the number in a separated bit, to simplify things and remove some ambiguities. Easy for a first attempt. Let's see what the four basic arithmetic operations look like in this rational form.

First, note the subtraction of a - b is just the addition of a and the additive inverse of b, ie, a + (-b), where -b can be computed simply by flipping the sign bit of b. Similarly, performing the division a/b is the same as doing the multiplication of a and the (multiplicative) inverse of b. Or in other words, a/b = a · (1/b), where (1/b) can be computed by simply swapping the numerator bn and denominator bd of b. So, this is the first interesting property of rational arithmetic - divisions and multiplications have the same cost, so unlike in regular floating point rendering where division are usually avoided or delayed or hidden under the latency of slow texture fetches, in rational arithmetic there's no need to fear them.

Ok, so it's clear we just need to implement addition and multiplication and division and subtraction will follow from there:

The first thing to note is that addition is very expensive, and can easily overflow the word size. Multiplication is similar. Other things to note - the propagation of the sign for the multiplication is just an xor, since two positives do a positive and so do two negatives. The propagation of the sign for the addition is more tricky and I implemented it quickly with three branches (the addition is trivial if both signs of a and b agree, but when they don't you have to pick the smallest of the two and subtract it from the other - I won't bother with the small implementation details here anymore, I'll put my source code somewhere).

I will also skip the implementation of the fract() and floor(), which you'll find easy and beautiful if you try. The comparison operators deserve some care as well. Once signs have been taken care of and assuming a and b are positive, then

The important thing to note here is that even comparison require us to do a couple of multiplications and potentially need to promote things to the next word size, and this will become important in a bit later in this article.

Lastly, we'll consider square roots later in its own dedicated section as well, knowing that for the most part we don't really need them (except for the sphere in this initial test).

For now, this naive implementation was sufficient to launch my first render of the plane+sphere+triangle+box test scene and tracer, and see how it would go. Note I was very generous for this first test and used 65 bit rationals (made of 32 bit for numerator, 32 for denominator and 1 for sign), which is really a lot of data, comparable to a "double" data type in C. The left image is what I got with this naive implementation, and on the right the reference image with regular floating point numbers (32 bit):

Naive 65 bit rationals

Floating point reference

The problem was with each rendering operation (dot, add, cross, etc, the numerator and denominator of my rational numbers were getting bigger and bigger without control. Remember than even rational addition requires multiplication of integers, which can only grow and grow. Imagine the following: assume our original world units were meters and that we were snapping our source geometry (vertices and camera) to a millimeter accuracy. Then, the source data for the scene alone would be on the 18 bits usage already. For a rather small scene. At the same time, for a typical HD screen with 4X antialiasing, the ray direction rationals need 12 bits. So, as soon as the first ray and geometry interaction happened, just on the very first arithmetic instruction that combined our ray and a camera or object transform, the numbers would become 18 + 12 = 30 bits long. This is close to overflowing what my 32 bit numerator and denominator! So basically, even before I had completed my very first dot or cross product, I was already at the verge of catastrophic errors. By the time the cross product was completed the renderer would already be needing rationals of hundreds of bits in order to represent the numbers properly. That's the worst case of course, but the average case was not far from that, no wonder I was barely seeing anything, except for the floor plane a bit and part of the sphere.

Test 2 - GCD reduction

One solution would be to use some BIGNUM library for infinite precision. But instead, I opted for something different, based on the following observation: different rational numbers can represent the same quantity. Indeed 6/12 is the same value as 1/2, yet it uses a lot more bits. So the idea was this - if after each basic arithmetic operation (or before it) I extracted all the common factors from the numerator and denominator and reduced the fraction to its simplest form, then maybe I could keep things under control and keep operating with exact arithmetic and without losing any precision for a longer time. Maybe long enough to get clean rendered images?

So, I'll take a small break here to give one more example: 588/910 can be simplified to 42/65, since the 14 divides both 588 and 910. The number 2 also divides 588 and 910, but we want to find the largest number that divides both, so we can save the maximum possible number of bits. Finding the largest number that divides two other numbers simultaneously can be done with the Great Common Divisor (GCD) algorithm, of which there are efficient forms everywhere. In my case I copied directly from the Wikipedia article on the GCD, and sped it up a little by doing the bit scan step using x64 intrinsics.

So, armed with the GCD, I extended my "rational" class to constantly keep simplifying my fractions as they were being operated on, that is, inside the implementation of the add and multiply operators. Now, there are two ways I could have done that:

This first one was to promote the intermediary result of the addition and multiplication operators to the next worth length (uin64_t in the case of the current 32 bit numerator/denominator approach), perform the GCD in that wider data type, and then demote the result to the source bit length (32 bits). The second approach was to analyze how an, ad, bn and bd combine with each other in both arithmetic operators, and extract common factors across them before performing the multiplications. This second approach prevents in principle the need for promoting to 64 bit arithmetic. Knowing that doing this was possible and could always go back to it, I chose implementing the first method instead because it was quicker to implement, allowing me to move faster (the night is short). With the GCD implemented in the add and multiply operators, let's see what kind of render I was able to produce:

65 bit rationals with GCD reduction

Floating point reference

Before going on my next step in the quest of making rational numbers actually viable, I want to take a break and do a little detour. Because even though the GCD had helped a lot, I still felt I was wasting bit-space. After all, the GCD was taking care of numbers such as 1000/2000 and reducing them to 1/2. But that means, that from all possible combinations of the 65 bits in my rational number representation, many were "invalid" or "wasted" combinations. Such as 1000/2000. My intuition was that most of the space must have been wasted. But was I right? How much bit space was I actually wasting?

Detour - on coprime numbers

Below are two pictures, the first show the bit usage for rational numbers of 5+5 bits (5 bit numerator and 5 bit denominator) and the second one shows the bit usage for rationals of 7+7 bits. The horizontal and vertical axis of the graphs represent the values of the numerator and denominator, for all possible numerators and denominators (up to 31 and 127 respectively). The black pixels are the unused combinations and the white pixels are valid fractions (those that cannot be simplified). For example, the diagonal is all black except for the 1/1 pixel, since all fractions of the form n/n can be reduced to 1/1.

bit usage for 5/5 rationals

bit usage for 7/7 rationals

In any case, it seemed I was wasting about 40% of the bit combinations in my rational representation, which while it felt like a lot... But only until I decided to look at it as actually being less than one bit... which no longer felt terrible (not show-stopper bad). With that in mind, I decided to move forward with other completely different approaches instead of trying to locally optimize this one issue. I did, however, do some rapid learning on Stern-Brocot and Calkin-Wilf trees, which would have allowed me to make full use of all the available bits, but the range of values I could get with them is really tiny, so I discarded the idea quickly and moved on. I think at this point I should formally credit Wikipedia for being a constant source of learning.

Okey, so let's recap where I was at this point in the night: I could render some broken images and there was some hope, but I was at the mercy of the incursion of prime numbers in the computations. Those little primes controlled when the GCD algorithm could or cold not simplify fractions. Specially large primes were dangerous - as soon as one made it into either the numerator or denominator, it would stay in there "polluting" the rational number and making it larger, with little hopes of appearing again in the denominator or numerator for the GCD to simplify. Statistically speaking, I felt my rationals were guaranteed to eventually explode; it was just a matter of how early or late.

To make things worse, I was using 65 bits for my rationals (1+32+32), twice as many bits as a standard floating point number, and still getting rather broken renders. I of course tried to use 1+15+16 rationals and check what quality I was getting for a "reasonable" data size, but the current numerator+denominator+GCD approach produced images that where just unrecognizable.

Test 3 - rational normalization

So now I was at a place where I needed to do something dramatic. It seemed like I needed to start chopping numbers and losing precision if I wanted to prevent the numbers from growing without limits. The whole exercise started from the idea of exploring exact rendering, but at this point I felt I had to give up on that idea and keep exploring elsewhere, if only for fun, and see where I would land (the original idea that kicks a research process is just that, an idea to kick a process, and often you land in totally unexpected places. Or as John Cleese once said, wrong ideas can lead you to good ideas, the creative process does not need to be a sequence or progression of logically correct steps at all time).

Anyways, I decided to see what I'd happen to the renders if I somehow managed to prevent numerator and denominator from overflowing. The simplest thing to do was to shift both numerator and denominator enough bits to the right, when necessary, until they fitted in the assigned bit space. This effectively does an integer division by a power of two both in numerator and denominator by the same amount, and hence the value of the rational stays approximately unchanged (if both numerator and denominator are large, which possibly are given we are in a near-overflow situation).

My first approach was simple - I looked at the number of bits needed by the numerator and the denominator, took the maximum, and shifted both by that amount of bits (making sure I was rounding to the nearest integer when doing so). When this was implemented both in the addition and multiplication operators, things started to look visually reasonable:

65 bit rationals with GCD reduction and normalization

Floating point reference

Test 4 - floating bar

At this point I decided to switch gears and stop making excuses - if I was going to find anything interesting around using rational numbers for rendering, it better be exactly 32 bits total and no more. I rather get some good idea or stop there and call it a night (well, two nights, since this was the beginning of my second session).

My first thought was that I could stick to the GCD and renormalizations ideas, but I needed to be smarter about the storage and bit utilization. The first thing that came to mind was that, even though numerator and denominator can get big, often they don't. Or at least not simultaneously. So, at the times the numerator is small, the denominator should be allowed to be big. And vice-versa. The unused bits of one of the two integers could be used by the other to express bigger values, as needed. Then I realized that the same way a floating point number is pretty much a fixed point format with the "fixed" point made variable, I could take my rational numbers and make the location of the bar of the fraction variable as well. Meaning, instead of hardcoding it to be 16+16, I could let the same 32bit variable sometimes be 16+16 but also some other times be 5+27, or 13+19, as needed.

It was worth trying. A few lines of packing/depacking code in the internal setters and getters for numerator and denominator was fast to code anyways. The most logical layout to try, I though, was 1|5|26, meaning:

1 bit : sign

5 bits: bar position (B)

26 bits: numerator and denominator data merged, numerator high 26-B bits, denominator low B bits

where the bar position (B) determines the size of the denominator. For example, the number 7/3 would in principle be

7/3 = 0 00010 000000000000000000000111 11

where the sign is 0 for "positive", the bar is "2" to indicate the the denominator (the number 3) needs 2 bits to be represented and that the rest of the bits are left for the numerator.

Now, the readers that have worked with the IEEE754 standard might find the following observation familiar: the binary representation of the denominator will always start with "1", for the bar number will always chop it to its shortest representation. That means that the first bit of the denominator needs not be stored. In this case, the number "3" can be represented just by the binary value "1" and a bar value of "1":

7/3 = 0 00001 0000000000000000000000111 1

This trick not only saved me one precious bit, but it also came with a beautiful side effect: when the bar is set to zero, it naturally and simultaneously implies that the denominator is 1 and that no room is needed to store it. In turn that means that my rational representation of numbers was suddenly fully compatible with regular integer representation and arithmetic as long as the numbers stayed below 226, which is a pretty decent number. Such a nice surprise! So in theory I could use the exact same data type, "rational", to perform the common rendering and shading operations but also do all the logic and control flow tasks in the path tracer - I'd no longer need to have two data types like in most renderers ("int" and "float") and convert back and forth! However the clock was ticking, so I did not change all my loop indices from "int" to "rational". The night was running short and I still had multiple things I wanted to try.

But first, let's look at what I could get using my new full 32 bit (1|5|26) Floating Bar rational numbers:

32 bit (1|5|26) floating bar rationals

32 bit floating point reference

Ohhh, not bad! I still had some artifacts in the sphere, but I decided I'd blame my poor square root implementation rather than the Floating Bar numbers themselves. On the other hand, the box and the triangle were really cleanly rendered.

Also note that the number of exactly resolved pixels per image went up thanks to the this adaptive bit allocation technique. I think that by allowing larger numbers to exist before reaching overflows in denominator or numerator, I increased the chances of the GCD to find common factors to reduce. And so, the floating bar not only increased the range of the representable numbers and delayed overflows driven lossy normalizations, but also got an extra kick of quality improvements and number of mathematically exact pixels!

A bigger challenge

At this point I was ready to try a bigger test (although still a toy - nothing close to production). I implemented a bare minimum pathtracer (not necessarily physically accurate or even physically motivated) and set a scene with some boxes and two light sources with a reference GPU implementation that you can find here: https://www.shadertoy.com/view/Xd2fzR.

I converted the tracer code and scene to C++ again, removed some unnecessary ray normalizations once more, and launched the render with my new Rational Bar based rational numbers. This is what I got:

32 bit floating bar rationals

32 bit floating point reference

Okey, now this was really not bad! I was really excited, but under the magnifying glass, I could clearly see some light leaks at the corners where the edges of the floor and ceiling meet. Here are some close ups showing the errors:

These could be due to a problem in my ray-box intersection implementation that only manifested in rationals, I wouldn't be surprised. Or maybe I was simply hitting the limits of what rationals can do. Anyway, I was pretty happy. And also I had other developments or experiments I wanted to try or think about in the short time left:

Some other experiments

Exact Arithmetic in 64 bit

After the success of 32 bit (1|5|26) Floating Bar rationals, I decided to try 64 bits and see if I could get all pixels of the screen to be exact. So I implemented 1|6|57 rationals quickly, although first I had to learn some more cool x64 intrinsics for bit shifting and 128bit integer arithmetic. These 57 bits of numerator/denominator allowed for a much bigger range of distances to be traced. And indeed, for simple scenes with a few triangles (not the path-traced scene above), I could get exact results. This was a success!However, ironically enough, the coplanarity test that I implemented in order to test the correctness required a few dot and cross products, which made the numbers start renormalizing themselves :) So, while I knew the render was exact (no breakpoint was hit in the renormalization code), I couldn't "prove it" mathematically. Heh. Well, all it means is that 64 bits was okey for a few triangles but wouldn't hold for more complex scenes anyways.

Square roots

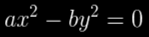

Square roots. This is a topic that I decided to stop a bit and learn new things this my second and last night of research. I wanted to implement the best possible square rooter for rationals. My current naive approach, which is bad, was to take the integer square root of the numerator (with proper rounding) and then do the same with the denominator. Since the square root of a fraction is the fraction of the square roots of its numerator and denominator, overall this approach returns something okeish that is not too far from the best possible answer. But it definitely does not produce the best rational approximation to the square root of a rational number. Intuitively, it is performing two approximations instead of a single one.What I tried is the following: what we really are after here are two integers x and y such that

We can square both sides and move terms around, and rewrite our problem to be that of finding the (non trivial) solution to the following diophantine equation (diophantine means that we are only interested in integer solutions):

After some online Wikipedia browsing I found that this particular equation is a so-called "Modified Pell's" equation, or "Pell's Like" equation. There are algorithms to find the smallest values of x and y that fulfill the equation. Unfortunately I fell for the Wikipedia rabbit hole, and didn't find the time and energy to try implementing any of it.

More efficient reduction

In my last minutes before my night and hence the experiment was over, I thought of trying to take advantage of the multiple terms that combine together in complex geometrical operators such as the cross product. For example, the first component of a cross product is

assuming sy=a/b, tz=c/d, ty=e/f, sz=g/h

This meant that now I could try finding common factors between a and d, or e and h, for example, and use them for early reductions.

Another idea I had was that if at some point rendering speed was a concern, I could try skipping the GCD steps altogether and only implement normalization. A quick test proved that doing so still produces reasonable renders and works just fine at a much faster speed. It does come with less number of arithmetically exact results though, of course.

However, a compromise could be to not implement a generic GCD routine or circuitry, but just something mathematically simple, hardcoded and efficient that finds divisibility by 2, 3 and 5 only. While not an exhaustive factorization, it should be able to catch a great amount of reductions in practice. Think that divisibility by 2 is three times more common than divisibility by 7, and twenty times more common than divisibility by 41!

Conclusion

After this exercise I ended up believing that there's perhaps room for a number representation that is based on rational numbers similar to what I am here calling "Floating Bar", a representation that is compatible with integers and can do many operations in exact arithmetic for many problems. The 64 bit version (1|6|57) can go a long way, although the 32 bit version (1|5|26) can already produce some interesting renderings.

If this hadn't been a two nights experiment but something made at work at a studio or a company, some possible next steps would have been:

* Get an histogram of number of exactly traced pixels vs not (or in other words, how frequently normalization happens and where)

* Try the hardcoded 2, 3 and 5 factor reduction, and measure percentage of lost exact pixels

* Show pixel difference between floating point rendering and float bar rendering

* Find creative ways to express inf and nan

* Implement the actual detection of nan, inf, underflow and overflow

Overall, this was a fun exploration. There were a few surprises on the way, a small invention, and lots of learning about Pell's equation, square roots, GCD, x86_64 intrinsics, Riemann Zeta function, and some other things. I'm pretty happy with it. I hope you too enoyed the reading!